Friday, February 6, 2026

10:20 – 11:10 a.m. in ETB 1020

Dr. Ayobami S. Edun

Senior Assistant Vice President

Title: “AI in Highly Regulated Environments: From Data Integrity to Explainability, Benchmarking, and Model‑Risk Management”

Abstract:

AI systems in highly regulated environments must deliver reliability, transparency, and operational safety under demanding real‑world conditions. Fraud‑detection models in particular face extreme class imbalance, delayed ground‑truth labels, adversarial behavior, and continuous data drift, which make rigorous validation both technically challenging and operationally essential. This seminar presents a reproducible, regulator‑aligned framework for assessing high‑risk AI/ML models, with emphasis on data integrity checks, drift diagnostics, and performance stability testing. I will show how surrogate and offset models reconstruct global model behavior when vendor transparency is limited, how segment‑level diagnostics expose underperformance, and how sensitivity and robustness analyses stress‑test stability under realistic perturbations. The talk concludes with practical strategies for automated validation pipelines, alert‑rate governance, and compensating controls that preserve model reliability while supporting frontline operations. The overarching goal is to show how disciplined validation and model‑risk management enable trustworthy, compliant AI in tightly governed domains.

Biography:

Ayobami S. Edun, Ph.D., is a Senior Assistant Vice President focused on validating AI and machine‑learning systems used for fraud detection in highly regulated financial environments. His work spans statistical testing, model explainability, adversarial robustness, and regulatory compliance, with emphasis on developing reproducible and audit‑ready validation pipelines. He regularly leads the validation of high‑risk vendor and in‑house models, including neural‑network‑based real‑time fraud detectors and dynamic, frequently retrained systems whose opacity and operational constraints require surrogate modeling and automated testing frameworks.

Dr. Edun holds a Ph.D. in Computer Engineering and works extensively with Python, Spark, and secure MLOps tooling to enable robust, large‑scale evaluation of model behavior. His research interests include explainable AI, stress testing of ML systems, benchmarking under distribution shift, and the intersection of AI governance with statistical methodology.

Friday, January 23, 2026

In conjunction with the ECE Leaders & Innovators:

Friday, March 25, 2025

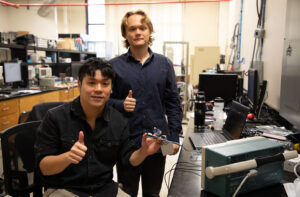

Congratulations to our May 2023 doctoral graduate Sambandh Dhal on receiving The Association of Former Students Distinguished Graduate Excellence in Research Doctoral Award!

Thank you to advisors Dr. Ulisses Braga-Neto and Dr. Stavros Kalafatis!